How AI Is Transforming Data Engineering.

High-quality, dependable data pipelines are critical for deploying AI agents and apps in production, giving employees real-time intelligence, and optimizing data warehouses for size and cost.

To keep up with the business’s expectations, data and engineering teams are turning to AI to automate the tedious, specialized work required in data extraction, transformation, and orchestration.

Simon Whitley, Chief Technology Officer at Advancing Analytics, recently joined Databricks employees for the “From Burnout to Breakthrough: A New Approach to Data Engineering” webinar. Here are the key takeaways.

Part 2: Traditional ETL operations in the agentic AI era.

Few developers use a consistent pattern for each data request. Each employee uses their preferred ETL toolkit, resulting in a web of competing frameworks. Individually, the pipelines may function well. Combined, however, they can create a tangled mess that makes problems harder to find and resolve.

While this tailored method may have worked in the past, today’s demands mean that engineers cannot afford to start from scratch every time. They must move even faster to provide key assets to an increasingly data- and AI-literate workforce. And that is only the human side of the business. AI agents will increasingly create their own data pipelines, writing their own code and devising their own solutions to the problem at hand.

Without the proper guardrails, the tangled problem gets worse. The transformation starts when AI is paired with a unified framework.

1. A Declarative Approach to Data Engineering

Every data pipeline begins with intention. The engineer must determine the data format, upload location, and other details. Previously, this was a highly manual process.

AI allows engineers to simply specify the pipelines they want in natural language, and the system handles the rest. They don’t have to start from scratch each time, nor do they have to worry about the underlying tooling. The same should apply to pipelines generated by AI agents. The underlying platform should automatically filter, clean, aggregate, and rearrange the data as needed to comply with the standard framework, speeding up the ETL workflow.
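To make the declarative idea concrete, here is a minimal sketch in plain Python. It does not use the Lakeflow API or any real platform. The spec format, the field names, and the `run_pipeline` function are all assumptions made up for illustration. The point is only that the engineer (or an AI agent) states what the output should be, and a shared engine decides how to clean, filter, and aggregate.

```python
from collections import defaultdict

# Hypothetical declarative spec: it states WHAT the pipeline should produce,
# not HOW to compute it. All keys and field names are illustrative.
PIPELINE_SPEC = {
    "required": ["region", "amount"],          # clean: drop rows missing these
    "filter": {"field": "amount", "min": 0},   # filter: keep amount >= min
    "group_by": "region",                      # aggregate: one total per group
    "sum": "amount",
}

def run_pipeline(spec, rows):
    """Apply the clean, filter, and aggregate steps described by spec."""
    totals = defaultdict(float)
    for row in rows:
        # Clean: skip rows with a missing required field.
        if any(row.get(name) is None for name in spec["required"]):
            continue
        # Filter: skip rows below the configured minimum.
        rule = spec["filter"]
        if row[rule["field"]] < rule["min"]:
            continue
        # Aggregate: accumulate the sum for this row's group.
        totals[row[spec["group_by"]]] += row[spec["sum"]]
    return dict(totals)

rows = [
    {"region": "EU", "amount": 10.0},
    {"region": "EU", "amount": 5.0},
    {"region": "US", "amount": -3.0},   # removed by the filter step
    {"region": None, "amount": 7.0},    # removed by the clean step
    {"region": "US", "amount": 2.5},
]
result = run_pipeline(PIPELINE_SPEC, rows)
```

Because every pipeline goes through the same engine, a human-written spec and an agent-written spec are held to the same rules, which is the guardrail the section describes.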

2. Why Data Platforms Are Essential to Modern Data Engineering

Data engineers’ jobs are rapidly evolving. Agility is key. And moving quickly necessitates faster access to reliable, precise data sets. Engineers, architects, and other roles can no longer create data pipelines as they see fit.

Data platforms are increasingly capable of handling the repetitive tasks associated with ETL workloads. And, as AI agents take on more of the grunt work, platforms can put in place the necessary safeguards to keep an already tangled web of competing frameworks from getting worse.

To see how Databricks Lakeflow Declarative Pipelines can help simplify your data engineering workloads, watch the complete webinar, “From Burnout to Breakthrough: A New Approach to Data Engineering.”

3. AI in data engineering: Applications, advantages, and challenges

Artificial intelligence is rapidly changing how data engineers create, manage, and optimize data infrastructure. AI has the ability to significantly improve data engineers’ productivity and efficiency by automating code production and expediting data migrations.

While AI adoption in data engineering workflows is still “finding its groove,” it already offers a significant opportunity to streamline manual processes, reduce costs, and drive innovation. In this piece, we’ll look at four significant use cases where AI is having an impact, the benefits it provides to data teams, and the hurdles businesses face when deploying AI-driven solutions.

Part 3: AI in data engineering applications

While there are many (and still expanding!) use cases for AI in data engineering, this article will focus on four practical use cases:

1. Code generation and optimization.

2. Automated code reviews.

3. Data warehouse performance and cost optimization

4. Data Migration

One of the most important (and most talked-about) applications of AI in data engineering is code generation. This may include using tools like GitHub Copilot to help write SQL for data transformation or using dbt Cloud’s in-app AI to quickly generate dbt models. Because writing code is still a time-consuming manual process for many data engineers, AI-assisted code generation and optimization will remain a major area of focus for data teams.

- Code reviews.

One of the most time-consuming aspects of the data and analytics engineering workflow is the manual code review, which checks code for a range of things:

- Consistency and conformance to code formatting standards
- Completeness
- Correctness and performance
- Impact on downstream models, assets, and data, among other factors

Without AI, this leads to hours of labor for every pull request, back-and-forth between the PR opener and reviewer, and, eventually, time spent on manual work that can (and will most likely) be automated by AI one day.
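As a rough illustration of what automating these checks involves, the sketch below covers two of them in plain Python: a formatting rule and a downstream impact lookup. It is not how Datafold or any other product works. The `DOWNSTREAM` graph, the model names, and both function names are hypothetical, and a real reviewer would derive the graph from project metadata rather than hard-code it.

```python
import re

# Hypothetical dependency graph: model name -> models that read from it.
DOWNSTREAM = {
    "stg_orders": ["fct_orders"],
    "fct_orders": ["rpt_revenue", "rpt_churn"],
    "rpt_revenue": [],
    "rpt_churn": [],
}

def style_findings(sql):
    """Return formatting findings for one SQL string."""
    findings = []
    # Flag SELECT * regardless of case or spacing.
    if re.search(r"select\s+\*", sql, flags=re.IGNORECASE):
        findings.append("avoid SELECT *; list columns explicitly")
    # Flag any line that ends in spaces or tabs.
    if any(line != line.rstrip() for line in sql.splitlines()):
        findings.append("remove trailing whitespace")
    return findings

def impacted_models(changed, graph):
    """Return every model reachable downstream of the changed model."""
    seen = []
    stack = list(graph.get(changed, []))
    while stack:
        model = stack.pop()
        if model not in seen:
            seen.append(model)
            stack.extend(graph.get(model, []))
    return sorted(seen)

findings = style_findings("select * from raw.orders")
impacted = impacted_models("stg_orders", DOWNSTREAM)
```

Rule-based checks like these cover the mechanical part of a review. The AI layer described in this section sits on top, summarizing which findings matter most for a given pull request.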

Datafold is pushing the limits of automated code reviews with its AI Code Reviews, which make PR evaluations faster, clearer, and more actionable. They support two AI-enabled functions:

- Highlighting the most important aspects of each PR, so you can see what has to be addressed right away.
- Answering questions about changes or impacts in a user-friendly chat interface.

2. Challenges of Using AI in Data Engineering

All of these AI improvements seem fantastic, and one day (most likely in the near- to medium-term future), these use cases and benefits will be much more accessible and practical to many data engineers. Today, there are still hurdles in adopting AI in data engineering, specifically data security and privacy concerns, organizational maturity, and data readiness.

3. Data protection and privacy

Given how easily new AI tools integrate into existing processes, and with AI companies like OpenAI (ChatGPT) and Anthropic (Claude) evolving at breakneck speed, security and legal teams have legitimate concerns about the access these AI and LLM providers have to company data. Maintaining data privacy and security in the AI era may involve ensuring that AI conversations are not used by providers to train their models, or ensuring that all training data is anonymized and encrypted.
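One common precaution is to mask sensitive fields before a record ever leaves your environment. The sketch below uses keyed hashing (HMAC-SHA256) from the Python standard library. Strictly speaking this is pseudonymization rather than full anonymization or encryption, and the field list, key handling, and `pseudonymize` function are assumptions for illustration only.

```python
import hashlib
import hmac

# Hypothetical list of fields that must never leave the environment in clear text.
SENSITIVE_FIELDS = {"email", "name"}

def pseudonymize(record, secret_key):
    """Replace sensitive values with a keyed hash; leave other fields untouched."""
    masked = {}
    for field, value in record.items():
        if field in SENSITIVE_FIELDS and value is not None:
            # Keyed hash: the same input and key always give the same token,
            # so joins still work, but the raw value is not exposed.
            digest = hmac.new(secret_key, str(value).encode("utf-8"), hashlib.sha256)
            masked[field] = digest.hexdigest()[:16]  # shortened for readability
        else:
            masked[field] = value
    return masked

key = b"example-key-store-real-keys-in-a-secret-manager"
record = {"email": "ana@example.com", "name": "Ana", "plan": "pro"}
safe_record = pseudonymize(record, key)
```

Only `safe_record` would be passed to an external model. Whether keyed hashing is sufficient depends on your regulatory context, so treat this as a starting point for a conversation with security and legal teams.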

4. Organizational readiness.

It’s difficult to find a company nowadays that isn’t on the “AI-hype train” (and for very good reasons). However, without the necessary infrastructure and processes in place to support AI in data engineering workflows, it may be difficult for AI to “take off” internally.

Conclusion

AI is changing the face of data engineering by automating repetitive manual activities, increasing productivity, and driving innovation. While the advantages, such as time savings, cost savings, and enhanced accessibility, are obvious, enterprises must also address issues such as data security, AI preparedness, and maintaining high data quality.

As AI technologies advance, their role in data engineering will grow, allowing teams to focus on more strategic and meaningful tasks.

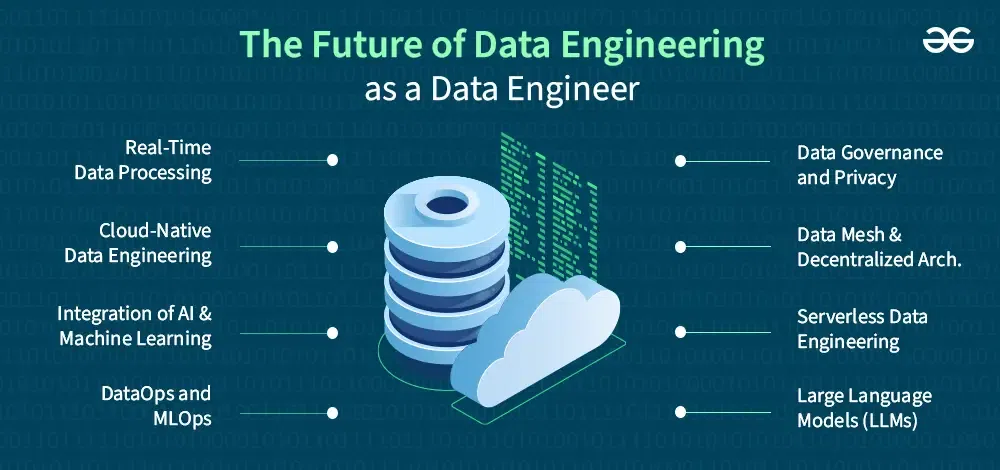
